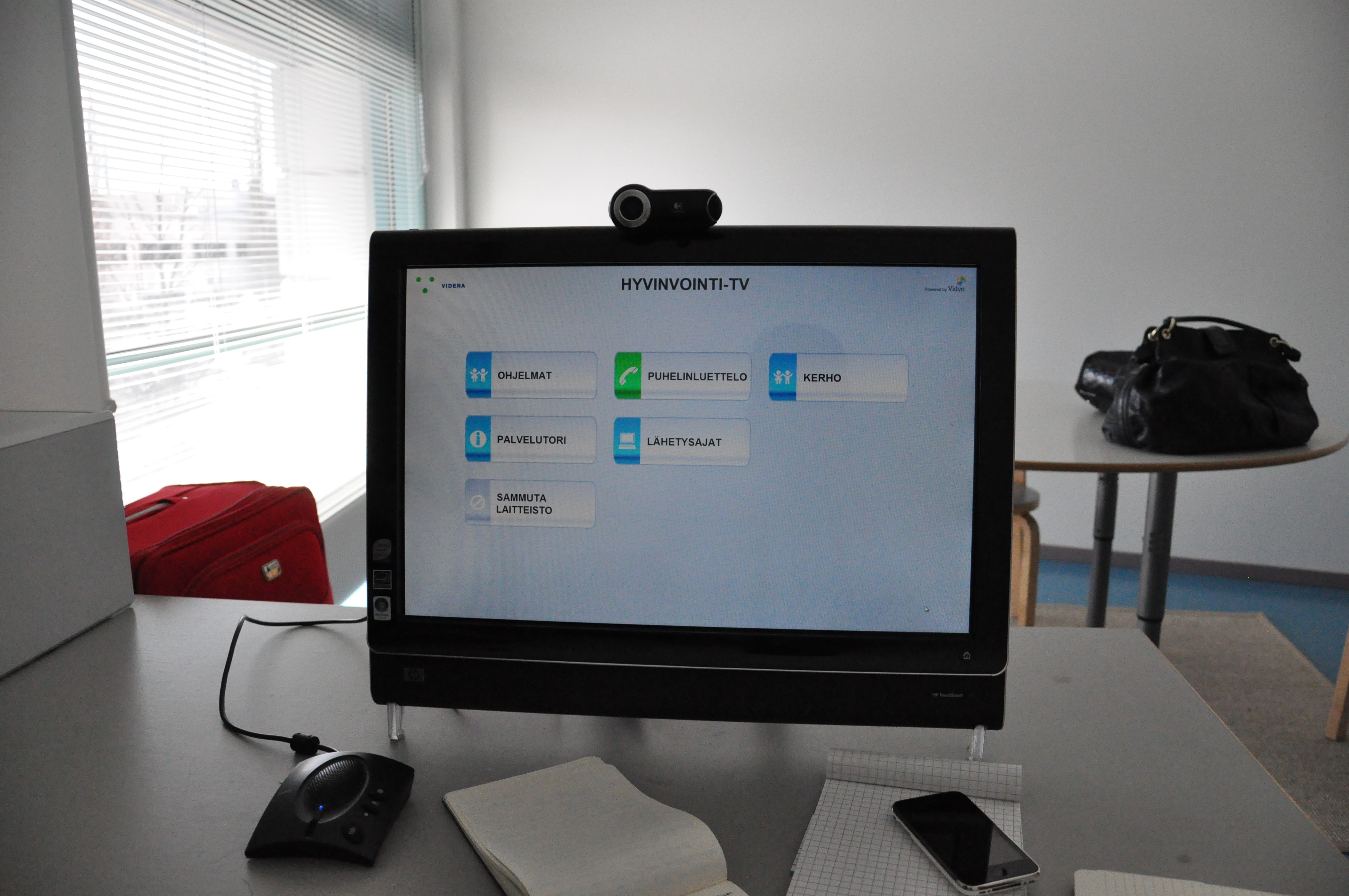

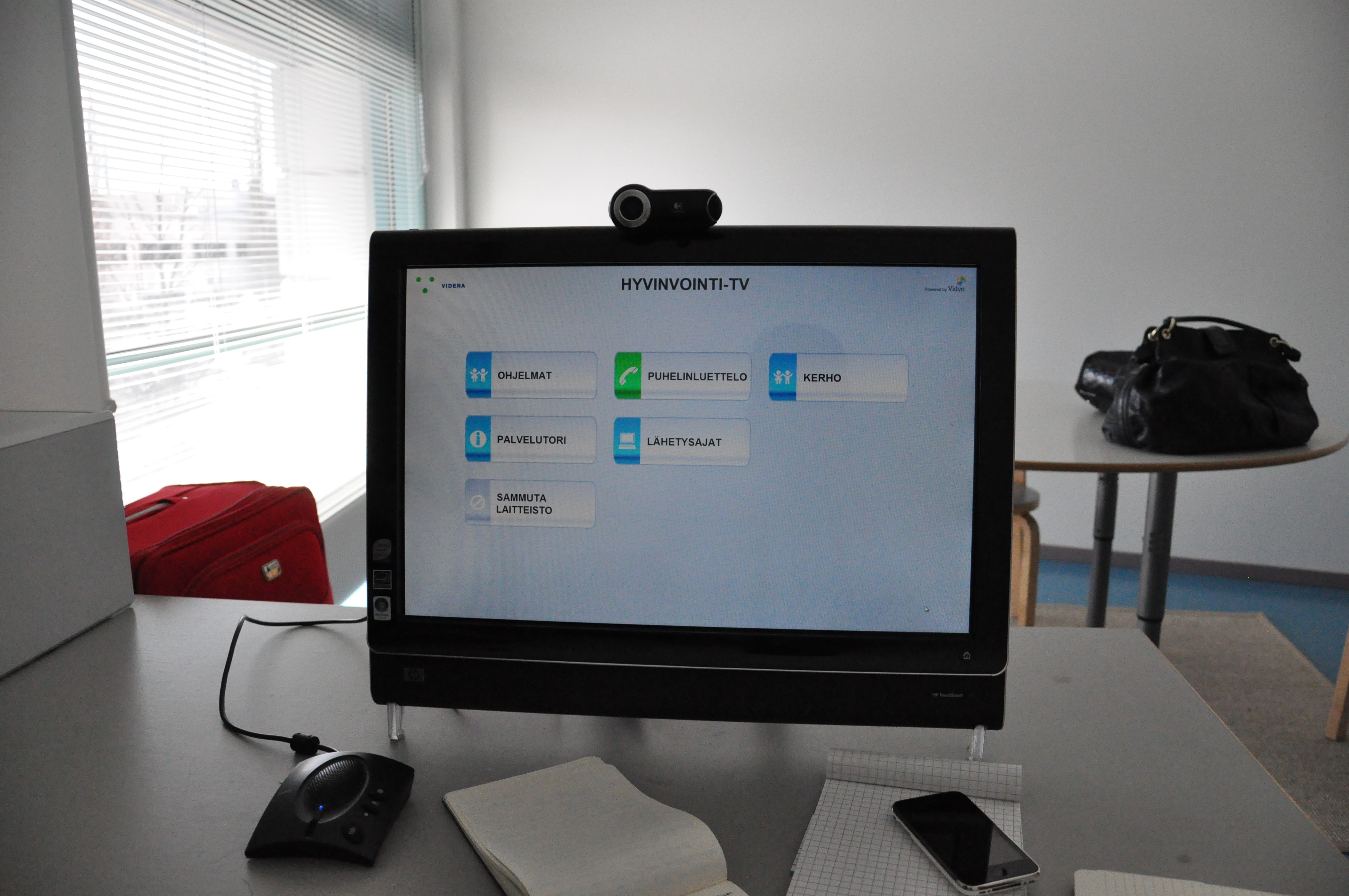

The CaringTV interface during a program broadcast from its studio at Laurea University, Helsinki.

Aino lives alone in a small town in Finland.

She is 72 years old. The nearest doctor is more than five miles away, and she doesn’t have a car.

Even though she doesn’t have a computer or use email, Aino does have a touchscreen (equipped with a video camera) that connects her to the world.

It’s something called CaringTV.

“It’s a lifeline for every day,” says Aino, who speaks only Finnish. “If they removed the device, I would feel like something was missing.”

CaringTV is a technological platform for the elderly, developed by Laurea University of Applied Sciences, TDC Song, Videra Oy and Espoo City. Its goal? To deliver welfare services to Finland’s growing elderly population through an ordinary TV set, a device seniors commonly use.

Every day at 10 a.m. and 1 p.m., Aino sits in front of her touchscreen and video camera to tune into CaringTV’s interactive programs. These programs range from physical exercises to singing, and from spiritual discussions to trivia games.

Every CaringTV client receives the same setup, so clients can tune into programs or call friends from their own touchscreens at home.

The CaringTV interface also offers “point-to-point” video calling for clients. To reach her friend list, Aino simply taps “Puhelinluettelo” (“phone directory”) on her screen and can connect with anyone immediately.

Although CaringTV’s main purpose is to improve the quality of life of Finland’s elderly population, the social aspect is by no means a secondary goal.

“The social contact is important, almost the main point in CaringTV,” says researcher Katja Tikkanen.

According to Tikkanen, many CaringTV clients who were previously strangers have gone from virtual friendships to friendships in the real world. For Aino, the interface is a source of support and connection: although she lives alone, she no longer has to feel completely alone.

“After watching the programs, we usually stay on the channel to chat with each other,” Aino says.

Katie Zhu (SONIC) received a grant from the Medill School of Journalism to travel to Helsinki and investigate living labs and their societal implications. She plans to study the CaringTV network further in her work at SONIC, examining how support is provided and how friendships develop among clients.